
Azure Storage Can’t Handle High-scale Workloads

Does Azure have what it takes to handle your storage workload?

We know that Azure provides a wide range of storage solutions, but are they really capable of handling the heavy workloads that modern applications demand?

First let’s discuss IOPS and Throughput in terms of storage…

- IOPS stands for Input/Output Operations Per Second; this is the number of requests (read/write/sequential/random) your application sends to your storage disks in one second.

- Throughput is a measurement of how much data is transferred to and from the storage device per second; it is usually measured in MB/s.

Now that we know about IOPS and throughput, how does Azure handle high-IOPS workloads and throughput requirements?
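
The two metrics are linked by the size of each request: throughput is roughly IOPS multiplied by the request size. Here is a minimal sketch of that arithmetic, assuming a fixed request size. The function name and the example numbers are illustrative, not Azure figures.

```python
def throughput_mb_per_s(iops: int, io_size_kib: int) -> float:
    """Approximate throughput in MB/s for a given IOPS rate and request size."""
    bytes_per_second = iops * io_size_kib * 1024
    return bytes_per_second / 1_000_000


# The same IOPS rate moves very different amounts of data
# depending on how large each request is.
small = throughput_mb_per_s(iops=5_000, io_size_kib=8)
large = throughput_mb_per_s(iops=5_000, io_size_kib=256)

print(f"8 KiB requests:   {small:.2f} MB/s")
print(f"256 KiB requests: {large:.2f} MB/s")
```

This is why a workload of many small random requests tends to hit the IOPS limit first, while large sequential transfers tend to hit the throughput limit first.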

You want a solution that can reliably and securely manage the large amount of data and requests you might throw at it. Another key point to remember is that in a high-workload environment, you also want to maintain low latency.

Storage Optimised Virtual Machines (VMs)

As detailed in Cloudbusting | The Cloud is More Expensive!, picking the right VM family and size is important. The storage optimised VMs are great for data warehousing, large transactional databases, big data and many more cases because they’re built for high disk I/O and throughput.

- Lsv3-series: These VMs have locally mapped NVMe storage. For every 8 vCPUs and 64GB of memory, one 1.92TB NVMe SSD device is allocated, with each disk delivering 400,000 read IOPS and 2,000 MBps of read throughput; larger sizes stripe the disks to deliver increased performance. This is a very capable virtual machine solution for I/O-intensive environments.

- For example, let’s take the Standard_L80s_v3 VM size from this series. This VM offers 80 vCPUs and 640GB of memory, with 10×1.92TB NVMe SSDs delivering 3.8M read IOPS and 20,000 MBps. You can then attach two 128GB Ultra Disks and stripe them into a single 256GB volume with 76,800 IOPS and 8,000 MBps, and still not reach the IOPS limit for data disks on that VM.
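
The per-8-vCPU allocation rule can be checked with a few lines of arithmetic. This is a rough sketch that uses only the per-disk figures quoted above; the function and constant names are hypothetical.

```python
VCPUS_PER_NVME = 8        # one NVMe device per 8 vCPUs (from the text)
NVME_SIZE_TB = 1.92       # capacity of each NVMe device
NVME_READ_IOPS = 400_000  # per-device read IOPS
NVME_READ_MBPS = 2_000    # per-device read throughput


def lsv3_local_nvme(vcpus: int) -> dict:
    """Estimate local NVMe resources for an Lsv3 size with the given vCPU count."""
    disks = vcpus // VCPUS_PER_NVME
    return {
        "disks": disks,
        "capacity_tb": round(disks * NVME_SIZE_TB, 2),
        "read_mbps": disks * NVME_READ_MBPS,
        # Naive sum of per-device IOPS; treat it as an upper bound.
        "read_iops_upper_bound": disks * NVME_READ_IOPS,
    }


l80 = lsv3_local_nvme(80)
print(l80)
```

For 80 vCPUs this gives 10 disks and 20,000 MBps, matching the figures above. The naive IOPS sum comes out at 4.0M, slightly above the 3.8M quoted for this size, so simple multiplication is best treated as a ceiling rather than a guarantee.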

Ultra Disks

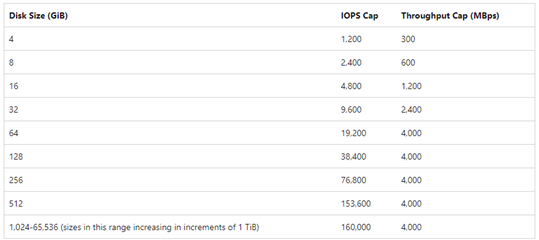

This is the highest-performing disk storage option, with disk sizes ranging from 4GB to 64TB. The larger your Ultra Disk, the higher its IOPS and throughput limits.

- The 4GB Ultra Disk supports up to 1,200 IOPS and 300 MB/s of throughput, whereas the 64TB Ultra Disk supports up to 160,000 IOPS and 4,000 MB/s of throughput.

Ultra Disks have limitations, such as not supporting disk encryption and not currently being supported by Azure Backup. If these limitations rule them out, Azure has another disk option that was also designed for I/O-intensive environments: Premium SSDs.
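
The way the IOPS limit grows with disk size can be sketched as a simple capped linear function. The 300 IOPS per GB rate is an assumption inferred from the figures in this post (1,200 IOPS on a 4GB disk, and 38,400 IOPS per 128GB disk in the striping example earlier); the names are illustrative.

```python
ULTRA_IOPS_PER_GIB = 300  # assumption: inferred from 1,200 IOPS / 4 GB
ULTRA_MAX_IOPS = 160_000  # per-disk ceiling quoted in the text


def ultra_disk_max_iops(size_gib: int) -> int:
    """Estimated IOPS limit for an Ultra Disk of the given size."""
    return min(size_gib * ULTRA_IOPS_PER_GIB, ULTRA_MAX_IOPS)


for size in (4, 128, 1024):
    print(f"{size:>5} GB -> {ultra_disk_max_iops(size):,} IOPS")
```

Under this assumption a 128GB disk is limited to 38,400 IOPS, so two of them striped together give 76,800, and any disk large enough to exceed the ceiling is capped at 160,000.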

Premium SSDs and Disk Striping

Premium SSDs focus on providing a cost-efficient way to maintain high performance.

- Premium SSDs come in several sizes to meet different needs, ranging from P1 to P80. A P1 disk has 4GB of space with 120 IOPS and 25 MB/s of throughput, while the largest size, P80, offers 32,767GB of space with 20,000 IOPS and 900 MB/s of throughput.

- These disks are great for SQL Server, Oracle, MongoDB, big data/analytics and SAP; the list goes on.

Disk Striping. What is it?

This is the process of bringing multiple disks together to behave as one large disk, removing the performance limitations of a single disk and increasing your environment’s I/O and throughput limits.
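
To make the idea concrete, here is a minimal sketch of how a striped volume might map a logical offset onto its member disks, in the style of RAID 0. The 64 KiB stripe size and the function name are illustrative assumptions, not an Azure or Storage Spaces default.

```python
STRIPE_SIZE = 64 * 1024  # illustrative stripe (interleave) size in bytes


def locate(offset: int, disk_count: int, stripe_size: int = STRIPE_SIZE) -> tuple:
    """Map a logical byte offset to (disk index, byte offset on that disk)."""
    stripe_index, within_stripe = divmod(offset, stripe_size)
    disk = stripe_index % disk_count  # stripes rotate across the disks
    disk_offset = (stripe_index // disk_count) * stripe_size + within_stripe
    return disk, disk_offset


# Consecutive stripes alternate between the two disks.
for logical in (0, 64 * 1024, 128 * 1024, 192 * 1024):
    print(logical, locate(logical, disk_count=2))
```

Because neighbouring stripes land on different disks, a large or highly parallel workload is spread across all members, which is where the combined IOPS and throughput come from.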

- Upgrading your virtual machine isn’t the only way to meet increased I/O and throughput demands. With disk striping, you can combine two 2TB data disks (P40 disks, each with 7,500 IOPS and 250 MB/s) into one 4TB volume with double the performance: 15,000 IOPS and 500 MB/s. This can be more cost-efficient than upgrading the Azure disk tier or the VM, although the volume is still bound by the max uncached data disk IOPS/throughput limit of your VM.

- You can stripe disks together using “Storage Spaces” on Windows machines, which makes the process of disk striping more user friendly, allowing you to stripe two or more drives together on your VM using the GUI or PowerShell. If you run out of storage, it’s as easy as adding another drive to your storage pool.
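
The effect of the VM limit on a striped volume can be estimated with a short calculation. This is a sketch only: the per-disk figures are half of the combined P40 numbers quoted above, and the VM caps in the example are hypothetical values chosen to show the capping behaviour.

```python
def striped_volume(disk_iops: int, disk_mbps: int, disk_count: int,
                   vm_iops_cap: int, vm_mbps_cap: int) -> tuple:
    """Estimated (IOPS, MB/s) of a striped volume, bounded by the VM's disk limits."""
    iops = min(disk_iops * disk_count, vm_iops_cap)
    mbps = min(disk_mbps * disk_count, vm_mbps_cap)
    return iops, mbps


# Two P40 disks on a VM with generous (hypothetical) uncached disk limits.
roomy = striped_volume(7_500, 250, 2, vm_iops_cap=80_000, vm_mbps_cap=2_000)

# The same two disks on a smaller (hypothetical) VM: the VM becomes the bottleneck.
capped = striped_volume(7_500, 250, 2, vm_iops_cap=12_800, vm_mbps_cap=200)

print("roomy VM: ", roomy)
print("capped VM:", capped)
```

If the capped result is lower than the sum of the disks, adding more disks to the pool will not help; at that point a larger VM size is the remaining option.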

Azure Storage

Whatever your data storage scenario, it’s likely Azure Storage has a service that fits into your infrastructure. There are four different services, each designed with high availability, scalability, security and accessibility in mind. They all allow large amounts of data to be stored in the cloud, with limits tied to the storage account, which has a default max capacity of 5 PiB. You’re also not limited to one storage account; Azure Storage has a default limit of 250 storage accounts per region, per subscription.

Azure Blob – A highly scalable storage solution for unstructured data. Typical uses include storing files for distributed access, storing data for backup and restore, disaster recovery and archiving, and storing data for analysis.

Azure Files – Do you need a file server? Azure Files is a fully managed cloud file share; these can be used for sharing data between applications, lift-and-shift, and can be used as an ideal replacement for a file server.

Azure Queues – Azure’s solution to storing large numbers of messages that can be built up when exchanging messages between your components in the cloud and on-premises. A queue may contain millions of messages, up to the total capacity limit of a storage account.

Azure Tables – Cloud solution for NoSQL datastore, storing large amounts of structured, non-relational data. You can have as many tables as you want within a storage account, and capacity is capped at the max storage capacity for the storage account.

Now that we’ve discussed some of Azure’s performance and storage capabilities for large workloads, and some of the storage solutions available, perhaps Azure can handle your storage workload after all.